Computer Science Organization | Mapping Techniques

In this article, we will learn about the mapping techniques of the cache memory in Computer Science Organization.

Submitted by Shivangi Jain, on July 17, 2018

Mapping techniques

Mapping is the process of transferring data from main memory to cache memory. Three mapping techniques are used in cache memory:

- Associative mapping

- Direct mapping

- Set Associative mapping

Each cache line contains the following components:

- Valid bit: This gives the status of the line. If it is 0, the line does not hold a valid data block; if it is 1, it does.

- Tag: This is the part of the main memory address that identifies which block the line holds.

- Data: This is the data block.
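The three components listed above can be pictured as a small record. The following is a minimal sketch only; the class name `CacheLine` and its methods `load` and `matches` are hypothetical names chosen for illustration, not part of any real hardware interface.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class CacheLine:
    """Hypothetical model of one cache line: valid bit, tag, and data block."""
    valid: bool = False                              # valid bit: False until a block is loaded
    tag: Optional[int] = None                        # tag bits taken from the main memory address
    data: List[int] = field(default_factory=list)    # the words of the cached block

    def load(self, tag: int, block: List[int]) -> None:
        """Store a block in this line and mark the line as valid."""
        self.valid = True
        self.tag = tag
        self.data = list(block)

    def matches(self, tag: int) -> bool:
        """A tag comparison only counts as a hit when the line is valid."""
        return self.valid and self.tag == tag
```

Checking the valid bit before comparing tags keeps an empty line from producing a false hit.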

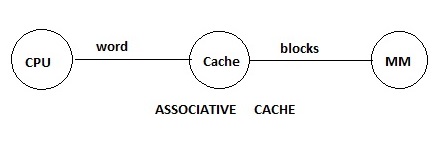

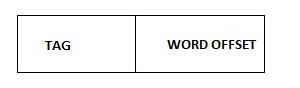

1) Associative mapping

In this technique, a block is not tied to one fixed line when data is transferred from main memory to cache memory: any main memory block can be mapped into any cache line. Therefore, the cache memory address is not used to locate a block. The associative cache controller interprets each request using the main memory address format. During the mapping process, the complete data block is transferred to cache memory along with its complete tag.

- The associative cache controller interprets a CPU-generated request as follows:

- The existing tags in the cache are compared with the CPU-generated tag.

- If any one of the tags matches, the operation is a hit, and the requested data is transferred to the CPU based on the word offset.

- If none of the tags match, the operation is a miss, and the reference is forwarded to main memory.

- According to the main memory address format, the corresponding main memory block is enabled and then transferred to the cache memory using associative mapping. The data is then transferred to the CPU.

- In this mapping technique, a replacement algorithm is used to choose which cache block to replace when the cache is full.

- Tag memory size = number of lines * number of tag bits in the line.

Tag memory size = 4*3 bits

Tag memory size = 12 bits
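The associative lookup steps and the tag memory calculation above can be sketched in a few lines of Python. This is an illustrative model under stated assumptions, not a description of real controller hardware: the names `AssociativeCache` and `tag_memory_bits` are hypothetical, the block size of 2 words is assumed, and FIFO is assumed as the replacement algorithm because the article does not name one. A dictionary lookup stands in for the parallel tag comparison.

```python
from collections import deque


def tag_memory_bits(num_lines, tag_bits):
    """Tag memory size = number of lines * number of tag bits in the line."""
    return num_lines * tag_bits


class AssociativeCache:
    """Hypothetical fully associative cache with FIFO replacement (assumed)."""

    def __init__(self, num_lines, words_per_block, memory):
        self.num_lines = num_lines
        self.words_per_block = words_per_block
        self.memory = memory      # flat list of words standing in for main memory
        self.lines = {}           # tag -> block data; any block may use any line
        self.order = deque()      # tags in the order they were loaded (for FIFO)

    def read(self, address):
        """Return (word, hit) for a word address."""
        # The tag is the whole block number; the remainder is the word offset.
        tag, offset = divmod(address, self.words_per_block)
        if tag in self.lines:                      # some stored tag matches: hit
            return self.lines[tag][offset], True
        # Miss: make room if the cache is full, then fetch the block.
        if len(self.lines) == self.num_lines:
            victim = self.order.popleft()          # oldest block is replaced
            del self.lines[victim]
        start = tag * self.words_per_block
        self.lines[tag] = self.memory[start:start + self.words_per_block]
        self.order.append(tag)
        return self.lines[tag][offset], False


memory = list(range(100, 116))                     # 16 words = 8 blocks of 2 words
demo = AssociativeCache(num_lines=4, words_per_block=2, memory=memory)
print(demo.read(5))           # first access to block 2: (105, False)
print(demo.read(4))           # same block again: (104, True)
print(tag_memory_bits(4, 3))  # 12
```

With 8 main memory blocks, the tag needs 3 bits, which matches the 4 * 3 = 12 bits computed above.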

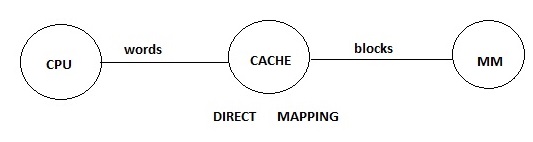

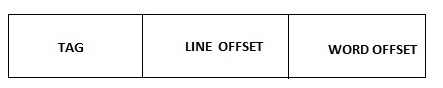

2) Direct mapping

In this mapping technique, a single mapping function is used to transfer data from main memory to cache memory. The mapping function is:

K mod N = i

Where,

- K is the main memory block number.

- N is the number of cache lines.

- And, i is the cache memory line number.

- The direct cache controller interprets a CPU-generated request as follows:

- The line offset is directly connected to the address logic of the cache memory, so the corresponding cache line is enabled.

- The existing tag in the enabled cache line is compared with the CPU-generated tag.

- If the two tags match, the operation is a hit, and the requested data is transferred to the CPU based on the word offset.

- If they do not match, the operation is a miss, and the reference is forwarded to main memory.

- According to the main memory address format, the corresponding block is enabled and then transferred to the cache memory using the direct mapping function. The data is then transferred to the CPU based on the word offset.

- In this mapping technique, a replacement algorithm is not required because the mapping function itself determines which block is replaced.

- The disadvantage of direct mapping is that each cache line can hold only one block at a time. Therefore, the number of conflict misses increases.

- To avoid this disadvantage, use an alternative cache organization in which each offset selects a set that can hold more than one tag at a time. This alternative is called set associative cache organization.

- Tag memory size = number of lines * number of tag bits in the line.

Tag memory size = 4*1 bits

Tag memory size = 4 bits
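The direct mapping function K mod N = i and the lookup steps above can be sketched as follows. This is a simplified model under stated assumptions: the class name `DirectMappedCache` is hypothetical, the block size of 2 words is assumed, and a Python list stands in for main memory.

```python
class DirectMappedCache:
    """Hypothetical direct-mapped cache: block K always goes to line K mod N."""

    def __init__(self, num_lines, words_per_block, memory):
        self.num_lines = num_lines
        self.words_per_block = words_per_block
        self.memory = memory                   # flat list of words standing in for main memory
        self.lines = [None] * num_lines        # each entry is (tag, block), or None if invalid

    def read(self, address):
        """Return (word, hit) for a word address."""
        block_number, offset = divmod(address, self.words_per_block)  # K and word offset
        index = block_number % self.num_lines                         # i = K mod N
        tag = block_number // self.num_lines                          # remaining upper bits
        entry = self.lines[index]
        if entry is not None and entry[0] == tag:                     # tags match: hit
            return entry[1][offset], True
        # Miss: fetch the block; it overwrites whatever the line held before.
        start = block_number * self.words_per_block
        block = self.memory[start:start + self.words_per_block]
        self.lines[index] = (tag, block)
        return block[offset], False


memory = list(range(100, 116))                 # 16 words = 8 blocks of 2 words
demo = DirectMappedCache(num_lines=4, words_per_block=2, memory=memory)
print(demo.read(0))   # block 0 -> line 0, first access: (100, False)
print(demo.read(8))   # block 4 -> line 0 as well, replaces block 0: (108, False)
print(demo.read(0))   # block 0 was evicted, a conflict miss: (100, False)
```

Blocks 0 and 4 both map to line 0, so alternating between them misses every time even though the other three lines are empty. With 8 blocks and 4 lines, each line can hold one of 2 blocks, so the tag needs 1 bit, which matches the 4 * 1 = 4 bits computed above.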

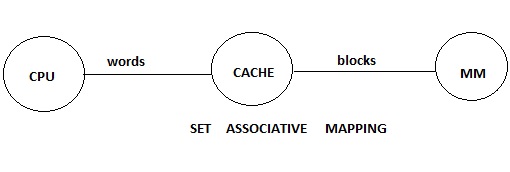

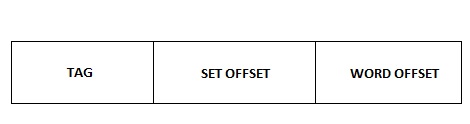

3) Set Associative Mapping

In this mapping technique, a mapping function selects the set, and the block can then be placed in any line of that set. The mapping function is:

K mod S = i

Where,

- K is the main memory block number,

- S is the number of cache sets,

- And, i is the cache memory set number.

- The set associative cache controller interprets a CPU-generated request as follows:

- The set offset is directly connected to the address logic of the cache memory, so the corresponding set is enabled.

- A set contains multiple blocks, so to identify the hit block, a multiplexer is needed to select the existing tags in the enabled set one after another, based on the selection bits, for comparison with the CPU-generated tag.

- If any one of them matches, the operation is a hit, and the data is transferred to the CPU. If none of them match, the operation is a miss, and the reference is forwarded to main memory.

- The main memory block is transferred to the cache memory using the set associative mapping function. The data is then transferred to the CPU.

- In this technique, a replacement algorithm is used to choose which block in the set to replace when the set is full.

- Tag memory size = number of sets in cache * number of blocks in the set * number of tag bits.

Tag memory size = 2 * 2 * 2 bits

Tag memory size = 8 bits
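The set associative function K mod S = i and the lookup steps above can be sketched in the same style. This is an illustrative model under stated assumptions: the class name `SetAssociativeCache` is hypothetical, the block size of 2 words is assumed, and FIFO within each set is assumed as the replacement algorithm because the article does not name one.

```python
class SetAssociativeCache:
    """Hypothetical set associative cache: block K goes to set K mod S."""

    def __init__(self, num_sets, ways, words_per_block, memory):
        self.num_sets = num_sets
        self.ways = ways                              # number of blocks each set can hold
        self.words_per_block = words_per_block
        self.memory = memory                          # flat list of words standing in for main memory
        self.sets = [[] for _ in range(num_sets)]     # each set: list of (tag, block), oldest first

    def read(self, address):
        """Return (word, hit) for a word address."""
        block_number, offset = divmod(address, self.words_per_block)  # K and word offset
        set_index = block_number % self.num_sets                      # i = K mod S
        tag = block_number // self.num_sets
        entries = self.sets[set_index]
        # Compare the stored tags in the enabled set one after another.
        for stored_tag, block in entries:
            if stored_tag == tag:
                return block[offset], True
        # Miss: replace the oldest block only when the set is full (FIFO, assumed).
        if len(entries) == self.ways:
            entries.pop(0)
        start = block_number * self.words_per_block
        block = self.memory[start:start + self.words_per_block]
        entries.append((tag, block))
        return block[offset], False


memory = list(range(100, 116))                        # 16 words = 8 blocks of 2 words
demo = SetAssociativeCache(num_sets=2, ways=2, words_per_block=2, memory=memory)
print(demo.read(0))   # block 0 -> set 0, first access: (100, False)
print(demo.read(8))   # block 4 -> set 0, uses the second line of the set: (108, False)
print(demo.read(0))   # block 0 is still present: (100, True)
```

Blocks 0 and 4 share set 0 but no longer evict each other, which is the conflict-miss case that direct mapping cannot avoid. With 8 blocks and 2 sets, each set can hold any of 4 blocks, so the tag needs 2 bits, which matches the 2 * 2 * 2 = 8 bits computed above.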