Data Preprocessing in Data Mining

Data Mining | Data Preprocessing: In this tutorial, we are going to learn about data preprocessing, the need for data preprocessing, and the data cleaning, data integration, data reduction, and data transformation processes.

By Harshita Jain Last updated : April 17, 2023

Introduction

In the previous article, we discussed data exploration, with which we started a detailed journey towards data mining. We learnt about data exploration, the statistical description of data, the concept of data visualization, and various techniques of data visualization.

In this article, we will discuss:

- Need of Data Preprocessing

- Data Cleaning Process

- Data Integration Process

- Data Reduction Process

- Data Transformation Process

1. Need of Data Preprocessing

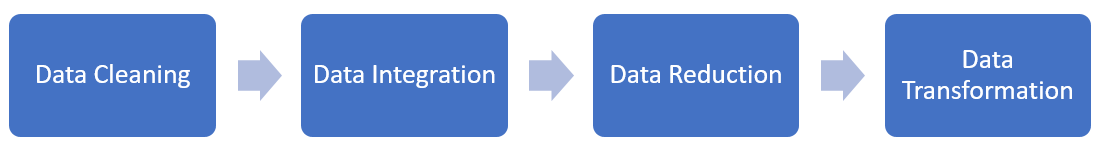

Data preprocessing refers to the set of techniques applied to a database to handle noisy, missing, and inconsistent data. The data preprocessing techniques used in data mining are data cleaning, data integration, data reduction, and data transformation.

The need for data preprocessing arises because real-world data, including much of the data held in databases, is often incomplete and inconsistent, which can lead to improper and inaccurate data mining results. To improve the quality of the data on which observation and analysis are to be done, it is treated with these four steps of data preprocessing. The better the quality of the data, the more accurate the observations and predictions.

Fig 1: Steps of Data Preprocessing

2. Data Cleaning Process

Data in the real world is usually incomplete, inconsistent, and noisy. The data cleaning process aims to fill in missing values, smooth out noise while identifying outliers, and correct inconsistencies in the data. Let us discuss the basic methods of data cleaning.

2.1. Missing Values

Assume that you are working with data such as sales and customer records, and you observe that several attributes have missing values. Most analyses cannot be run reliably on data with missing values, so the gaps have to be handled first. Let us go through the available methods one by one.

2.1.1. Ignore the tuple:

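This method is usually applied when the class label is missing (assuming the mining task involves classification). It is not very effective unless the tuple has several attributes with missing values, and it works especially poorly when the percentage of missing values varies considerably from attribute to attribute.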

2.1.2. Enter the missing value manually or fill it with a global constant:

Filling in values manually is time-consuming and is not feasible when the database has a large number of missing values. The alternative is to replace every missing value of an attribute with the same global constant, such as "Unknown".

2.1.3. Filling the missing value with attribute mean or by using the most probable value:

Another option is to fill the missing value with the mean of the attribute. Alternatively, the most probable value can be estimated using regression, a Bayesian formulation, or a decision tree.
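The first three strategies can be sketched in a few lines of Python. This is only an illustration: the `records` list, its field names, and the helper functions are hypothetical, and `None` is assumed to mark a missing value.

```python
from statistics import mean

# Hypothetical customer records; None marks a missing value.
records = [
    {"customer": "A", "income": 52000, "label": "yes"},
    {"customer": "B", "income": None, "label": "no"},
    {"customer": "C", "income": 61000, "label": None},
    {"customer": "D", "income": 43000, "label": "yes"},
]

def ignore_tuples(rows, key):
    """Drop every row whose `key` value is missing."""
    return [r for r in rows if r[key] is not None]

def fill_constant(rows, key, constant):
    """Replace missing `key` values with one global constant."""
    return [{**r, key: constant if r[key] is None else r[key]} for r in rows]

def fill_mean(rows, key):
    """Replace missing `key` values with the mean of the observed values."""
    observed = [r[key] for r in rows if r[key] is not None]
    avg = mean(observed)
    return [{**r, key: avg if r[key] is None else r[key]} for r in rows]

labelled = ignore_tuples(records, "label")      # drops customer C
flagged = fill_constant(records, "income", -1)  # customer B gets -1
imputed = fill_mean(records, "income")          # customer B gets the mean income
```

Each helper returns a new list and leaves `records` untouched, so the strategies can be compared side by side on the same input.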

2.2. Noisy Data

Noise is a random error or variance in a measured variable. Given a numerical attribute, the data can be smoothed to reduce the noise. Some data smoothing techniques are as follows.

2.2.1. Binning:

- Smoothing by bin means: In smoothing by bin means, each value in a bin is replaced by the mean value of the bin.

- Smoothing by bin median: In this method, each bin value is replaced by its bin median value.

- Smoothing by bin boundary: In smoothing by bin boundaries, the minimum and maximum values in a given bin are identified as the bin boundaries. Every value in the bin is then replaced with the closest boundary value.

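Let us understand with an example.

Sorted data for price (in dollars): 4, 8, 9, 15, 21, 21, 24, 25, 26, 28, 29, 34

Partition into equal-frequency bins of four values each:

- Bin 1: 4, 8, 9, 15

- Bin 2: 21, 21, 24, 25

- Bin 3: 26, 28, 29, 34

Smoothing by bin means (rounded to the nearest dollar):

- Bin 1: 9, 9, 9, 9

- Bin 2: 23, 23, 23, 23

- Bin 3: 29, 29, 29, 29

Smoothing by bin boundaries:

- Bin 1: 4, 4, 4, 15

- Bin 2: 21, 21, 25, 25

- Bin 3: 26, 26, 26, 34

Smoothing by bin median (each bin has an even number of values, so the median is the average of the two middle values):

- Bin 1: 8.5, 8.5, 8.5, 8.5

- Bin 2: 22.5, 22.5, 22.5, 22.5

- Bin 3: 28.5, 28.5, 28.5, 28.5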
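The three smoothing variants can be sketched in Python for the price data above. The function names are illustrative, the bins are assumed to be equal-frequency bins of four values, and ties in boundary smoothing are sent to the lower boundary, which is an arbitrary choice for this sketch.

```python
from statistics import mean, median

def equal_frequency_bins(sorted_values, bin_size):
    """Split already-sorted values into consecutive bins of `bin_size` items."""
    return [sorted_values[i:i + bin_size]
            for i in range(0, len(sorted_values), bin_size)]

def smooth_by_means(bins):
    """Replace every value in a bin with the bin mean."""
    return [[mean(b)] * len(b) for b in bins]

def smooth_by_medians(bins):
    """Replace every value in a bin with the bin median."""
    return [[median(b)] * len(b) for b in bins]

def smooth_by_boundaries(bins):
    """Replace every value with the closer of the bin's min and max."""
    smoothed = []
    for b in bins:
        low, high = min(b), max(b)
        # Ties go to the lower boundary (an arbitrary choice for this sketch).
        smoothed.append([low if v - low <= high - v else high for v in b])
    return smoothed

prices = [4, 8, 9, 15, 21, 21, 24, 25, 26, 28, 29, 34]
bins = equal_frequency_bins(prices, 4)

by_means = smooth_by_means(bins)
by_medians = smooth_by_medians(bins)
by_boundaries = smooth_by_boundaries(bins)
```

The means are left unrounded here (9, 22.75 and 29.25). Note that `statistics.median` averages the two middle values of an even-sized bin, so it yields 8.5, 22.5 and 28.5 for these bins.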

2.2.2. Regression:

Regression smooths data by fitting it to a function. Linear regression finds the best straight line through the data, so that the value of y can be predicted from a given value of x. Multiple linear regression extends this to predict the value of one variable from the given values of two or more other variables.
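As a minimal sketch of smoothing by linear regression, the following Python code fits a least-squares line and replaces each observed value with the fitted one. The measurements and the `fit_line` helper are hypothetical and serve only as an illustration.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical noisy measurements that roughly follow y = 2x + 1.
xs = [1, 2, 3, 4, 5]
ys = [3.1, 4.9, 7.2, 8.8, 11.0]

slope, intercept = fit_line(xs, ys)

# Smoothed values: each y is replaced by the value on the fitted line.
smoothed = [slope * x + intercept for x in xs]
```

The same fitted line can also be used to estimate y for an x that was never observed, which is how regression supports filling in a missing value.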

3. Data Integration Process

Data integration is a data preprocessing technique that combines data from multiple heterogeneous data sources into a coherent data store and supplies a unified view of the data. These sources may include multiple data cubes, databases, or flat files.

3.1. Approaches

There are two major approaches to data integration: the "tight coupling" approach and the "loose coupling" approach.

Tight Coupling:

Here, a data warehouse is treated as an information retrieval component.

In this coupling, data is combined from different sources into one physical location through the method of ETL – Extraction, Transformation, and Loading.

Loose Coupling:

Here, an interface is provided that takes the query from the user, transforms it into a form the source databases can understand, and then sends the query directly to the source databases to obtain the result. The data remains only in the actual source databases.

3.2. Issues in Data Integration

There are a number of issues to consider during data integration: schema integration, redundancy, and the detection and resolution of data value conflicts. These are explained briefly below.

3.2.1. Schema Integration:

Integrate metadata from different sources.

Matching up equivalent real-world entities from multiple sources is referred to as the entity identification problem.

For example, how can the data analyst or the computer be sure that customer id in one database and customer number in another refer to the same attribute?

3.2.2. Redundancy:

An attribute may be redundant if it can be derived from another attribute or set of attributes.

Inconsistencies in attribute naming can also cause redundancies in the resulting data set.

Some redundancies can be detected by correlation analysis.
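For numeric attributes, one common form of correlation analysis is the Pearson correlation coefficient. The sketch below uses hypothetical attribute values in which annual salary is derived from monthly salary while age is unrelated; a coefficient close to +1 or -1 hints that one of the two attributes may be redundant.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

# Hypothetical attributes from an integrated customer table.
monthly_salary = [3000, 4200, 5100, 3900, 6100]
annual_salary = [12 * m for m in monthly_salary]  # derived attribute
age = [41, 29, 35, 52, 33]

r_salary = pearson(monthly_salary, annual_salary)  # very close to 1
r_age = pearson(monthly_salary, age)               # much weaker relationship
```

A high correlation is only a hint; whether an attribute is actually dropped remains a judgment call for the analyst.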

3.2.3. Detection and resolution of data value conflicts:

This is the third important issue in data integration. For the same real-world entity, attribute values from different sources may differ. An attribute in one system may also be recorded at a lower level of abstraction than the "same" attribute in another.

4. Data Reduction Process

Data warehouses usually store large amounts of data, so data mining operations can take a long time to process it. Data reduction techniques produce a much smaller representation of the data set that still yields the same, or almost the same, analytical results. The following methods are commonly used for data reduction:

- Data cube aggregation

Refers to a method where aggregation operations are performed on data to create a data cube, which helps to analyze business trends and performance.

- Attribute subset selection

Refers to a method where redundant attributes or dimensions or irrelevant data may be identified and removed.

- Dimensionality reduction

Refers to a method where encoding techniques are used to minimize the size of the data set.

- Numerosity reduction

Refers to a method where the data is replaced by a smaller representation, such as a model, a sample, a set of clusters, or a histogram.

- Discretization and concept hierarchy generation

Refers to methods where raw data values for attributes are replaced by ranges or higher conceptual levels. Data discretization is a form of numerosity reduction that is useful for the automatic generation of concept hierarchies.
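Two of these methods are easy to illustrate in Python: aggregating quarterly figures up to yearly totals, and discretizing a numeric attribute into concept-level labels. The sales figures, the age cut-offs, and the function names are all hypothetical choices made for this sketch.

```python
from collections import defaultdict

# Hypothetical quarterly sales records: (year, quarter, branch, amount).
sales = [
    (2021, "Q1", "north", 224), (2021, "Q2", "north", 408),
    (2021, "Q3", "north", 350), (2021, "Q4", "north", 586),
    (2022, "Q1", "north", 310), (2022, "Q2", "north", 402),
    (2022, "Q3", "north", 390), (2022, "Q4", "north", 610),
]

def aggregate_by_year(rows):
    """Roll quarterly amounts up to one total per (year, branch)."""
    totals = defaultdict(int)
    for year, _quarter, branch, amount in rows:
        totals[(year, branch)] += amount
    return dict(totals)

def discretize_age(age):
    """Map a raw age onto a hypothetical three-level concept hierarchy."""
    if age < 30:
        return "young"
    if age < 60:
        return "middle-aged"
    return "senior"

annual = aggregate_by_year(sales)  # 8 rows reduced to 2 totals
age_groups = [discretize_age(a) for a in [23, 35, 47, 61, 29]]
```

Both steps shrink the data: the aggregation reduces the number of rows, and the discretization reduces the number of distinct values of an attribute.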

5. Data Transformation Process

In the data transformation process, data is transformed from one format into another format that is more appropriate for data mining.

Some data transformation strategies are:

- Smoothing

Smoothing is the process of removing noise from the data.

- Aggregation

Aggregation is a process where summary or aggregation operations are applied to the data.

- Generalization

In generalization, low-level data is replaced with higher-level concepts by climbing concept hierarchies.

- Normalization

Normalization scales attribute data so that it falls within a small specified range, such as 0.0 to 1.0.

- Attribute Construction

In Attribute construction, new attributes are constructed from the given set of attributes.
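Normalization and attribute construction can be sketched as follows. Min-max scaling is used here as one common way to map values into the range 0.0 to 1.0; the income values, the plot records, and the derived "area" attribute are hypothetical.

```python
def min_max_normalize(values, new_min=0.0, new_max=1.0):
    """Linearly rescale values so they fall within [new_min, new_max]."""
    old_min, old_max = min(values), max(values)
    if old_max == old_min:
        # All values are identical; map them to the lower bound.
        return [new_min for _ in values]
    return [
        (v - old_min) / (old_max - old_min) * (new_max - new_min) + new_min
        for v in values
    ]

# Hypothetical income values.
incomes = [12000, 35000, 58000, 73600, 98000]
normalized = min_max_normalize(incomes)

# Attribute construction: derive a new "area" attribute from width and height.
plots = [{"width": 10, "height": 20}, {"width": 15, "height": 8}]
with_area = [{**p, "area": p["width"] * p["height"]} for p in plots]
```

After scaling, the smallest income maps to 0.0 and the largest to 1.0, with every other value falling proportionally in between.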
