Hadoop YARN Architecture: Explained with Features and Key Components

Hadoop YARN Architecture: In this tutorial, we will learn about the Hadoop YARN architecture, the functioning of its key components, and its main features.

By IncludeHelp. Last updated: July 16, 2023

Hadoop YARN Architecture

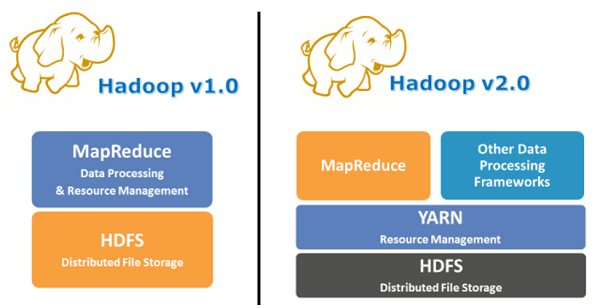

YARN (Yet Another Resource Negotiator) is, as the name implies, the Hadoop component that manages the resources distributed across a cluster. In a nutshell, it is responsible for allocating resources and scheduling work in a Hadoop system. YARN also allows Hadoop to support distributed applications other than MapReduce.

YARN's basic concept is to split resource management and job scheduling/monitoring into separate daemons: a global ResourceManager (RM) and a per-application ApplicationMaster (AM). An application is either a single job or a DAG of jobs.

YARN lets users run a wide range of technologies on the same cluster as needed, including Spark for real-time processing, Hive for SQL, HBase for NoSQL, and others. In addition to resource management, YARN handles job scheduling, so it covers everything from assigning resources to scheduling work.

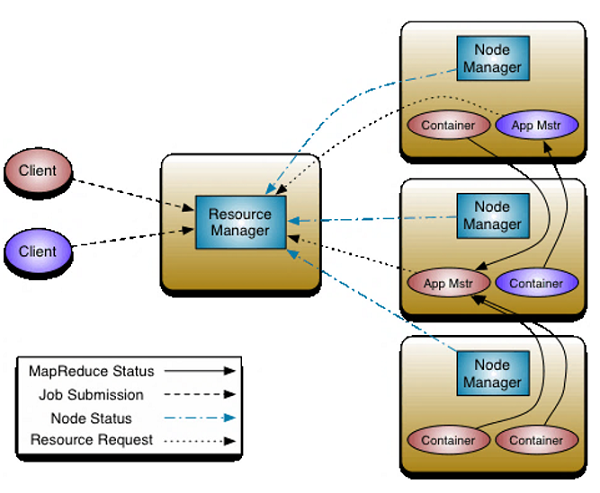

Fig: YARN Architecture

The YARN architecture is depicted in the diagram above. It is made up of several key components: the Resource Manager, the Node Manager, Containers, and the Application Master. The Resource Manager and the Node Manager are at the heart of YARN and play a vital role in scheduling and managing Hadoop tasks on the cluster. The Resource Manager decides which nodes will host an application's containers, based on the resources each node reports as available. Each Node Manager then launches and monitors the containers assigned to its own node.

Functioning of Key Components

1. Resource Manager

The Resource Manager runs on a master server and controls how the cluster's resources are shared out. It accepts job requests and starts the first container of each application, which runs the ApplicationMaster; if the ApplicationMaster container fails, it starts it again. Together, the ResourceManager and the NodeManager form the data-computation framework. The ResourceManager is the ultimate authority in that framework because it arbitrates resources among all the applications in the system.

It has two major components:

- Scheduler

- Application Manager

(a) Scheduler

The Scheduler is responsible for allocating resources to the running applications, subject to constraints such as capacities and queues. It is a "pure scheduler": it does not monitor or track the status of applications, and it offers no guarantee that tasks which fail because of an application or hardware failure will be restarted. It schedules purely on the basis of each application's resource requirements. The policy that partitions the cluster's resources among applications is pluggable; the Capacity Scheduler and the Fair Scheduler are two such plug-ins that can be used as the Scheduler in the ResourceManager.
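To make the idea of a pluggable scheduling policy more concrete, here is a minimal Python sketch of max-min fair sharing of memory among applications. It is only an illustration of one possible policy and not the actual Fair Scheduler or Capacity Scheduler implementation; the function name `fair_share` and the application names are hypothetical.

```python
def fair_share(capacity_mb, demands_mb):
    """Split capacity_mb among apps using max-min fairness.

    demands_mb maps a (hypothetical) app name to the memory it asks for.
    Apps that want less than an equal share keep their full demand, and
    the leftover is divided among the remaining apps.
    """
    allocation = {}
    remaining = capacity_mb
    # Visit apps from the smallest to the largest demand.
    pending = sorted(demands_mb.items(), key=lambda item: item[1])
    while pending:
        equal_share = remaining / len(pending)
        app, demand = pending.pop(0)
        granted = min(demand, equal_share)
        allocation[app] = granted
        remaining -= granted
    return allocation


demands = {"etl": 2048, "report": 6144, "ml": 8192}
shares = fair_share(12288, demands)
print(shares)
```

Note that the function keeps no record of the applications between calls, which mirrors the "pure scheduler" idea: it only decides who gets how much.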

(b) Application Manager

The Application Manager accepts jobs submitted for execution and plays a central role in managing the lifecycle of applications running on the cluster. The following are the key roles of the Application Manager:

- Application Submission: Clients or users send their applications to the Application Manager. It checks and confirms the application's dependencies and resource needs.

- Resource Negotiation: The Application Manager negotiates with the Scheduler to acquire the resources the application needs, starting with the container for its Application Master. It conveys the resource requirements and ensures that the resources are allocated in accordance with the application's requirements.

- Application Scheduling: The Application Manager works closely with the Scheduler to place application jobs on the cluster resources that are available. It takes resource availability, policies, and the order of applications into account when making scheduling choices.

- Application Monitoring: The Application Manager keeps an eye on the progress and status of running applications. It collects metrics, keeps track of how resources are used, and reports the state of the application to the client/user.

- Application Coordination: The Application Manager makes sure that application jobs are done in a coordinated way across the cluster. It connects with Node Managers to start, monitor, and manage application containers.

- Fault Tolerance and Recovery: The Application Manager handles failures and tries to recover application tasks that have failed. It makes sure that failed tasks are rescheduled or restarted to maintain the application's reliability.
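The submission and recovery roles above can be sketched with a small toy model in Python. This is a simplified illustration and not YARN's real API: the class and method names are hypothetical, and the limit of two ApplicationMaster attempts is an assumed value chosen for the example.

```python
class ApplicationManager:
    """Toy model: accepts applications and relaunches a failed ApplicationMaster."""

    def __init__(self, max_attempts=2):
        self.max_attempts = max_attempts  # assumed limit for this example
        self.attempts = {}                # app_id -> AM attempts started so far
        self.state = {}                   # app_id -> "RUNNING" or "FAILED"

    def submit(self, app_id):
        # Accept the application and start the first ApplicationMaster attempt.
        self.attempts[app_id] = 1
        self.state[app_id] = "RUNNING"

    def on_am_failure(self, app_id):
        # Relaunch the ApplicationMaster until the attempt limit is reached.
        if self.attempts[app_id] < self.max_attempts:
            self.attempts[app_id] += 1
            self.state[app_id] = "RUNNING"
        else:
            self.state[app_id] = "FAILED"
        return self.state[app_id]


manager = ApplicationManager(max_attempts=2)
manager.submit("app_1")
first = manager.on_am_failure("app_1")   # a second attempt is started
second = manager.on_am_failure("app_1")  # limit reached, the application fails
print(first, second)
```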

2. Node Manager

The Node Manager is responsible for executing tasks on each data node. By default, it sends a heartbeat to the Resource Manager that carries information about the running containers and the resources available for new containers. The following are the key responsibilities of the Node Manager:

- Resource Management: The Node Manager is responsible for managing the physical resources (CPU, memory, disk, etc.) available on a node. It reports the node's resource availability to the ResourceManager and updates the node's resource state as containers start and finish.

- Container Execution: The Node Manager manages containers on behalf of the ResourceManager. It monitors the status and resource consumption of each container and notifies the ResourceManager of any faults or completions.

- Security and Container Isolation: The Node Manager ensures that containers operating on a node are secure and isolated. It enforces resource limits and utilisation policies specified by the cluster administrator to prevent a single application from dominating system resources. It also enforces container isolation by limiting the resources accessible to each container via various Linux kernel mechanisms, such as cgroups.
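As a rough illustration of the heartbeat and the resource limits described above, the following Python sketch models a single node. It is a toy under stated assumptions, not the real Node Manager: the class, its fields, and the shape of the heartbeat dictionary are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class NodeManager:
    """Toy Node Manager that tracks the containers running on one node."""
    node_id: str
    total_mb: int
    total_vcores: int
    containers: dict = field(default_factory=dict)  # container_id -> (mb, vcores)

    def _used(self):
        used_mb = sum(mb for mb, _ in self.containers.values())
        used_vcores = sum(vc for _, vc in self.containers.values())
        return used_mb, used_vcores

    def launch(self, container_id, mb, vcores):
        # Enforce the node's limits before starting a container.
        used_mb, used_vcores = self._used()
        if used_mb + mb > self.total_mb or used_vcores + vcores > self.total_vcores:
            raise ValueError(f"not enough free resources on {self.node_id}")
        self.containers[container_id] = (mb, vcores)

    def heartbeat(self):
        # Report running containers and what is still free for new ones.
        used_mb, used_vcores = self._used()
        return {
            "node_id": self.node_id,
            "running_containers": sorted(self.containers),
            "available_mb": self.total_mb - used_mb,
            "available_vcores": self.total_vcores - used_vcores,
        }


nm = NodeManager("node-1", total_mb=8192, total_vcores=4)
nm.launch("container_1", mb=2048, vcores=1)
nm.launch("container_2", mb=1024, vcores=1)
report = nm.heartbeat()
print(report)
```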

3. Application Master

An individual Application Master is launched for each job when it is submitted to the framework. Its chief responsibility is to negotiate resources from the Resource Manager. It then works with the Node Managers to execute and monitor the tasks, keeps track of their progress, and manages the lifecycle of its application on the cluster.

To run an application through YARN, the following steps are performed:

- The client contacts the Resource Manager and asks it to run the application process, i.e. it submits the YARN application.

- Next, the Resource Manager finds a Node Manager that can launch the Application Master in a container.

- The Application Master can either run the computation in the container in which it is already running and return the result to the client, or request more containers from the Resource Manager, which results in a distributed computation.

- The client then contacts the Resource Manager to monitor the status of the application.
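The steps above can be sketched as a small simulation in Python. This is a toy model, not the YARN client API: the `ResourceManager` class, its first-fit placement rule, and the node and task names are assumptions made for illustration.

```python
class ResourceManager:
    """Toy Resource Manager that places containers on nodes by free memory."""

    def __init__(self, free_mb_by_node):
        self.free_mb = dict(free_mb_by_node)
        self.events = []

    def allocate(self, mb, purpose):
        # Pick the first node (in insertion order) with enough free memory.
        for node, free in self.free_mb.items():
            if free >= mb:
                self.free_mb[node] = free - mb
                self.events.append(f"{purpose} on {node}")
                return node
        self.events.append(f"{purpose} pending")
        return None

    def submit_application(self, am_mb):
        # The client submits; the RM finds a node for the ApplicationMaster container.
        return self.allocate(am_mb, "ApplicationMaster")


rm = ResourceManager({"node-1": 2048, "node-2": 4096})
rm.submit_application(am_mb=1024)
# The ApplicationMaster asks the RM for two more task containers.
for i in range(2):
    rm.allocate(2048, f"task-{i}")
print(rm.events)
```

A real Resource Manager applies its configured scheduling policy rather than first-fit; the simple rule here only keeps the flow easy to follow.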

4. Container

A container is a collection of physical resources on a single node, such as RAM, CPU cores, and disks. YARN containers are managed through a container launch context (CLC), a record that describes the container life cycle. This record contains a map of environment variables, dependencies stored in remotely accessible storage, security tokens, the payload for Node Manager services, and the command needed to start the process. A container grants an application permission to use a specific amount of resources (memory, CPU, etc.) on a particular host.
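To visualise what such a record holds, here is a minimal Python sketch that mirrors the fields listed above. The field names, the paths, and the command are hypothetical and chosen for illustration; this is not the Java class that YARN itself uses.

```python
from dataclasses import dataclass, field


@dataclass
class ContainerLaunchContext:
    """Illustrative record describing how to start a container's process."""
    environment: dict = field(default_factory=dict)      # environment variables
    local_resources: dict = field(default_factory=dict)  # name -> remote location of a dependency
    tokens: bytes = b""                                  # security tokens
    service_data: dict = field(default_factory=dict)     # payload for Node Manager services
    commands: list = field(default_factory=list)         # command that starts the process


@dataclass
class Container:
    """A grant of resources on one host, plus the context used to launch it."""
    host: str
    memory_mb: int
    vcores: int
    launch_context: ContainerLaunchContext


clc = ContainerLaunchContext(
    environment={"APP_HOME": "/opt/app"},                         # hypothetical path
    local_resources={"app.jar": "hdfs:///apps/example/app.jar"},  # hypothetical location
    commands=["java -jar app.jar"],
)
container = Container(host="node-1", memory_mb=2048, vcores=1, launch_context=clc)
print(container)
```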

YARN Architecture Features

The following are the main features of YARN architecture:

- Scalability: YARN allows managing large clusters with thousands of nodes. It can efficiently manage and allocate resources to accommodate the growing demands of big data solutions.

- Resource Management: YARN separates the functions of resource management and job scheduling, making it more flexible and extensible. YARN also supports dynamic allocation of resources to applications based on their needs.

- Fault-tolerance: YARN has built-in fault-tolerance mechanisms to ensure reliable application execution. It can recover from faults automatically by restarting failed components or rescheduling tasks on different nodes. This helps maintain high availability and keeps applications running without interruption.

- Security: YARN provides security features to protect the cluster and the applications running on it. It supports Kerberos and Hadoop Access Control Lists (ACLs) as authentication and authorization mechanisms. It also enables secure communication between various YARN architecture components.

- Application Isolation: Each application operates in its own container with isolated resources due to YARN's containerization capabilities. This isolation prevents applications from interfering with one another and enables efficient utilisation of cluster resources.

- Pluggable Schedulers: Different scheduling algorithms can be inserted into YARN based on the requirements of the applications. It provides a pluggable architecture that enables the use of custom or third-party schedulers, providing flexibility and adaptability to various workload scenarios.

- Monitoring and Metrics: YARN provides monitoring and metrics capabilities to track the resource usage, performance, and health of applications and the cluster. It offers various APIs and tools for collecting and analyzing application-level and system-level metrics, allowing administrators to optimize resource allocation and troubleshoot issues.
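As one example of working with such metrics, the Resource Manager exposes a REST API whose cluster metrics resource is served under `/ws/v1/cluster/metrics` and returns a `clusterMetrics` JSON object. The Python sketch below computes memory utilisation from a payload of that shape. The sample values are invented for illustration, and the helper name `memory_utilization` is hypothetical; in practice the payload would be fetched from the Resource Manager's web address.

```python
import json


def memory_utilization(payload_text):
    """Return allocated memory as a fraction of total cluster memory."""
    metrics = json.loads(payload_text)["clusterMetrics"]
    return metrics["allocatedMB"] / metrics["totalMB"]


# Invented sample payload; only a few fields of the response are shown.
sample = '{"clusterMetrics": {"allocatedMB": 6144, "totalMB": 24576, "activeNodes": 3}}'
utilization = memory_utilization(sample)
print(utilization)
```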

Why did YARN become popular?

YARN has become popular for the following reasons:

- Thanks to the scalability of the Resource Manager in the YARN architecture, Hadoop can manage clusters with thousands of nodes.

- YARN remains compatible with Hadoop 1.0, so existing MapReduce applications continue to run unaffected.

- YARN allocates cluster resources dynamically, which leads to better cluster utilization.

- Multi-tenancy enables an organization to gain the benefits of multiple engines at once.
