Cache Memory Performance and Its Design

Last Updated : December 30, 2025

Prerequisite: Cache Memory and its levels

Cache Performance

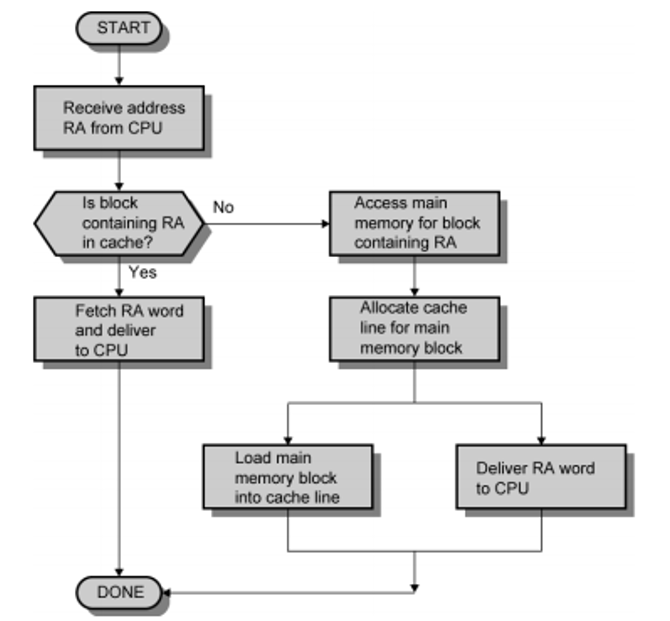

- When the CPU needs to read or write a location in main memory (that is, when a process requires some data), it first checks for a corresponding entry in the cache.

- If the processor finds the memory location in the cache, this is referred to as a cache hit, and the data is read from the cache.

- If the processor does not find the memory location in the cache, this is referred to as a cache miss. On a cache miss, the data is read from main memory. The cache then allocates a new entry and copies the data from main memory into it, on the assumption that the data will be needed again.

The performance of cache memory is commonly measured by a quantity known as the "hit ratio".

Hit ratio = Number of cache hits / (Number of cache hits + Number of cache misses)

= Number of cache hits / Total accesses
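As a minimal sketch of the formula above, the following Python snippet counts hits and misses over a sequence of memory accesses. The function name `measure_hit_ratio`, the sample trace, and the assumption of an unbounded cache (nothing is ever evicted) are illustrative choices, not part of the original article.

```python
def measure_hit_ratio(trace):
    """Count hits and misses for a list of addresses.

    Assumption: the cache is unbounded, so an address misses
    only the first time it is accessed.
    """
    cached = set()
    hits = misses = 0
    for address in trace:
        if address in cached:
            hits += 1            # cache hit: data is already in the cache
        else:
            misses += 1          # cache miss: fetch from main memory
            cached.add(address)  # allocate a new cache entry
    total = hits + misses
    ratio = hits / total if total else 0.0
    return hits, misses, ratio


# Hypothetical access trace
hits, misses, ratio = measure_hit_ratio([1, 2, 1, 3, 2, 1])
print(f"hits={hits} misses={misses} hit ratio={ratio:.2f}")
```

In this trace, addresses 1, 2, and 3 each miss once and the three repeat accesses hit, so the hit ratio is 3 / 6.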

We can improve cache performance by reducing the miss rate (for example, with a larger cache block size or higher associativity), reducing the miss penalty, and reducing the time to hit in the cache.
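These three factors are often combined into a single figure, the average memory access time (AMAT = hit time + miss rate × miss penalty). The sketch below is one way to compute it; the function name and the sample timings are assumptions chosen for illustration.

```python
def average_memory_access_time(hit_time, miss_rate, miss_penalty):
    """AMAT = hit time + miss rate * miss penalty (all times in the same unit)."""
    if not 0.0 <= miss_rate <= 1.0:
        raise ValueError("miss_rate must be between 0 and 1")
    return hit_time + miss_rate * miss_penalty


# Hypothetical figures: 1 ns hit time, 100 ns miss penalty
baseline = average_memory_access_time(hit_time=1.0, miss_rate=0.05, miss_penalty=100.0)
improved = average_memory_access_time(hit_time=1.0, miss_rate=0.02, miss_penalty=100.0)
print(f"baseline AMAT: {baseline:.2f} ns, with lower miss rate: {improved:.2f} ns")
```

Lowering any one of the three inputs lowers the result, which is why each appears as a separate target for optimization.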

Cache Memory Design

Cache memory design involves the following elements: block size, cache size, mapping function, replacement algorithm, and write policy. These are described below.

1. Block Size

- Block size is the unit of information exchanged between cache and main memory. On a storage system, all volumes share the same cache space, so the volumes can have only one cache block size.

- As the block size increases from small to larger sizes, the hit ratio at first increases because of the principle of locality: each fetch brings more useful data into the cache.

- As the block becomes even larger, the hit ratio begins to decrease, because fewer blocks fit in the cache and the extra data in each block is less likely to be used than the data it displaces.
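The rise and fall of the hit ratio with block size can be illustrated with a small simulation. This is only a sketch under stated assumptions: a direct-mapped cache of 16 bytes and a hypothetical workload that repeatedly reads two 4-byte regions. The function name `simulate_hit_ratio` and all parameters are invented for the example.

```python
def simulate_hit_ratio(trace, cache_size, block_size):
    """Simulate a direct-mapped cache and return its hit ratio."""
    num_lines = cache_size // block_size
    lines = [None] * num_lines        # block number currently held by each line
    hits = 0
    for address in trace:
        block = address // block_size
        index = block % num_lines     # direct mapping: one possible line per block
        if lines[index] == block:
            hits += 1
        else:
            lines[index] = block      # miss: fetch the whole block, replacing the old one
    return hits / len(trace)


# Hypothetical workload: 10 passes over addresses 0..3 and 20..23
one_pass = list(range(0, 4)) + list(range(20, 24))
trace = one_pass * 10

ratios = {b: simulate_hit_ratio(trace, cache_size=16, block_size=b) for b in (1, 4, 8, 16)}
for b, r in ratios.items():
    print(f"block size {b:2d} bytes: hit ratio {r:.3f}")
```

With this particular trace, the hit ratio improves from 1-byte to 4-byte blocks, then drops for 8-byte and 16-byte blocks because the cache holds too few lines to keep both regions resident. Real workloads will show different crossover points.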

2. Cache Size

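- A small cache is cheaper and can be accessed faster, but it holds less data and therefore tends to have a lower hit ratio. A larger cache improves the hit ratio but tends to be slower and more expensive, so the cache size is chosen to balance these effects.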

3. Mapping Function

- Cache lines or cache blocks are the fixed-size units in which data is transferred between memory and cache. When a block is copied from memory into the cache, a cache entry is created.

- There are fewer cache lines than memory blocks, which is why we need an algorithm for mapping memory blocks into cache lines.

- The mapping function is also the means of determining which memory block currently occupies which cache line. Whenever a cache entry is created, the mapping function determines the cache location the block will occupy.

- The key point is that when one block of data is read in, another block may have to be replaced.

For example, if the cache is 64 KB and the cache block is 4 bytes, the cache has 64 KB / 4 bytes = 16K (16,384) lines.
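To make the 64 KB example concrete, the sketch below splits an address into tag, line, and byte-offset fields. It assumes direct mapping (the article does not name a specific mapping scheme here), and the function name `split_address` and the sample address are illustrative.

```python
CACHE_SIZE = 64 * 1024                     # 64 KB cache
BLOCK_SIZE = 4                             # 4-byte blocks
NUM_LINES = CACHE_SIZE // BLOCK_SIZE       # 16384 lines (16K)

OFFSET_BITS = BLOCK_SIZE.bit_length() - 1  # 2 bits select a byte within a block
LINE_BITS = NUM_LINES.bit_length() - 1     # 14 bits select a cache line


def split_address(address):
    """Split an address into (tag, line, offset), assuming direct mapping."""
    offset = address & (BLOCK_SIZE - 1)
    line = (address >> OFFSET_BITS) & (NUM_LINES - 1)
    tag = address >> (OFFSET_BITS + LINE_BITS)
    return tag, line, offset


tag, line, offset = split_address(0x12345678)
print(f"lines={NUM_LINES} tag={tag:#x} line={line:#x} offset={offset}")
```

The line field tells the cache where to look, and the stored tag is compared with the address tag to decide whether the access is a hit.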

4. Replacement Algorithm

- If all slots in the cache are already full and we want to read a new line from memory, it must replace some line that is already in the cache. The replacement algorithm chooses which block to replace when a new block is to be loaded into the cache. Ideally, we replace the block that is least likely to be needed in the near future.

- A common policy is the least-recently-used (LRU) algorithm, which replaces the block that has gone unused for the longest time, on the assumption that recently used blocks are likely to be used again. Other replacement algorithms include FIFO (first in, first out) and least-frequently-used (LFU).
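A minimal LRU sketch in Python follows, using `collections.OrderedDict` to track recency. The class name `LRUCache`, its `access` method, and the two-block capacity are assumptions made for the example; hardware caches typically implement LRU (or an approximation of it) per set with a few status bits rather than an ordered map.

```python
from collections import OrderedDict


class LRUCache:
    """Toy fully associative cache with least-recently-used replacement."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.blocks = OrderedDict()   # oldest entry first, most recent last

    def access(self, block):
        """Return True on a hit, False on a miss."""
        if block in self.blocks:
            self.blocks.move_to_end(block)     # mark as most recently used
            return True
        if len(self.blocks) >= self.capacity:
            self.blocks.popitem(last=False)    # evict the least recently used block
        self.blocks[block] = True
        return False


cache = LRUCache(capacity=2)
results = [cache.access(b) for b in [1, 2, 1, 3, 2]]
print(results, list(cache.blocks))
```

In this run, the access to block 3 evicts block 2 (block 1 was used more recently), so the final access to block 2 misses again.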

5. Write Policy

- When data is written to the cache, it must at some point also be written to main memory. The timing of this write is referred to as the write policy.

- In a write-through operation, every write to the cache is accompanied by a write to main memory.

- In a write-back (or copy-back) operation, writes are not immediately propagated to main memory. The write is made only to the cache, and the cache tracks which locations have been written over, marking them as dirty.

- The data in these dirty locations is written back to main memory only when it is evicted from the cache.
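The difference between the two policies can be sketched as follows. The classes `WriteThroughCache` and `WriteBackCache`, the `evict` method, and the use of a plain dictionary as main memory are hypothetical simplifications for illustration.

```python
class WriteThroughCache:
    """Every write updates the cache and main memory together."""

    def __init__(self, memory):
        self.memory = memory
        self.lines = {}

    def write(self, address, value):
        self.lines[address] = value
        self.memory[address] = value       # main memory is always up to date


class WriteBackCache:
    """Writes update only the cache; dirty data reaches memory on eviction."""

    def __init__(self, memory):
        self.memory = memory
        self.lines = {}
        self.dirty = set()                 # addresses modified since they were loaded

    def write(self, address, value):
        self.lines[address] = value
        self.dirty.add(address)            # mark the location as dirty

    def evict(self, address):
        value = self.lines.pop(address)
        if address in self.dirty:
            self.memory[address] = value   # write back only if modified
            self.dirty.discard(address)


wt_memory, wb_memory = {}, {}
wt = WriteThroughCache(wt_memory)
wb = WriteBackCache(wb_memory)

wt.write(0x10, 42)
wb.write(0x10, 42)
before_evict = dict(wb_memory)             # write-back memory has not seen the write yet
wb.evict(0x10)
print(wt_memory, before_evict, wb_memory)
```

Write-through keeps main memory consistent at the cost of more memory traffic, while write-back defers that traffic until eviction.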
